Sneak peek, early PDG/Houdini integration and database model overview.

This time last year I started writing legos, an Asset Management System (AMS), for a long-term personal project. The system would be central to the pipeline I am developing in tandem to support the production of the project. Having worked at all the major VFX facilities in London and studied their pipelines closely, I realised that a good AMS (including the tools required to integrate it into the pipeline and various DCCs) is key to having a robust and scalable pipeline for VFX production.

Almost all the major studios have their own implementation of an AMS, which sits at the heart of their pipeline. Some are state of the art while others are decent. However, an AMS is not a magic bullet; it’s a tool which needs to be properly designed and integrated into the pipeline. A lot of places get this part wrong. They design an AMS, but its integration into the pipeline and its user interactivity are quite lacking, leading to obnoxious workflows which steal away the time artists should be spending on aesthetic decisions to make the work look better.

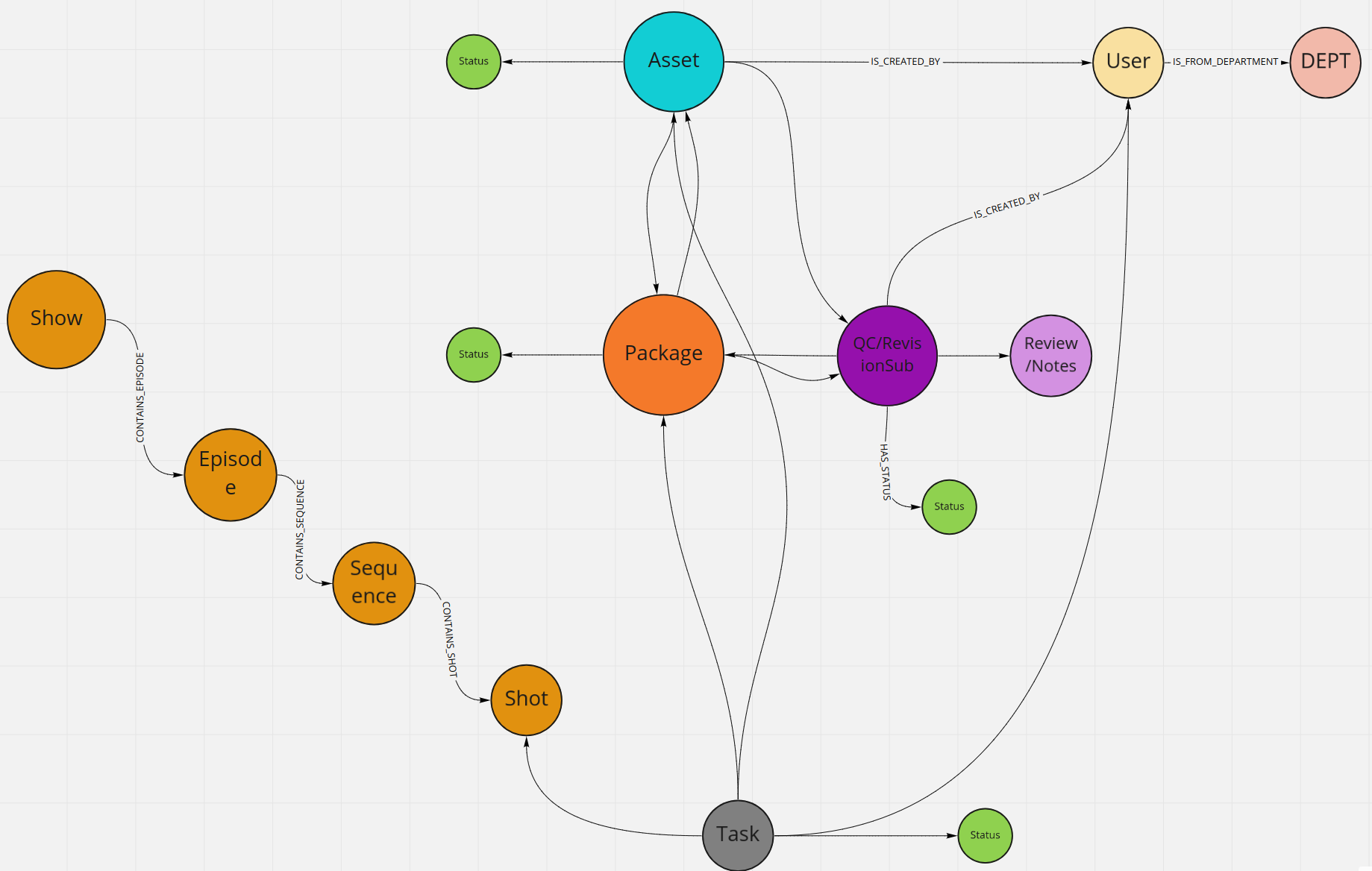

legos is an asset management system built specifically for managing assets generated during production of a VFX project. It is written completely in Python 3 and leverages Neo4j’s graph database technology as its primary database workhorse. legos builds upon simple abstract constructs such as Assets, Packages, Relationships etc. to quickly build and track complex dependencies.
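To make these constructs a little more concrete, here is a minimal sketch of how Assets, Packages and Relationships could be modelled in plain Python. It is not the actual legos code: the class fields, the `CONTAINS` relationship kind and the example names are all assumptions made purely for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Asset:
    """A single versioned piece of production data (model, texture, light rig...)."""
    name: str
    kind: str
    version: int = 1


@dataclass(frozen=True)
class Relationship:
    """A directed, typed edge between two entities, e.g. package CONTAINS asset."""
    source: str
    kind: str
    target: str


@dataclass
class Package:
    """A named, versioned collection of assets (hypothetical layout)."""
    name: str
    version: int = 1
    assets: List[Asset] = field(default_factory=list)

    def add(self, asset: Asset) -> Relationship:
        # Store the asset and return the edge that would be written to the graph.
        self.assets.append(asset)
        return Relationship(self.name, "CONTAINS", asset.name)


package = Package("skaarj_lookdev")
edge = package.add(Asset("skaarj_model", kind="model"))
```

In a graph database, each `Asset` and `Package` would map naturally to a node and each `Relationship` to an edge, which is a large part of why the object models translate so directly.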

Without going into too much detail in the interest of brevity, let me outline a select few terms we will encounter in subsequent sections.

Now that we have a few terms out of the way, let’s have a look at a typical production use case.

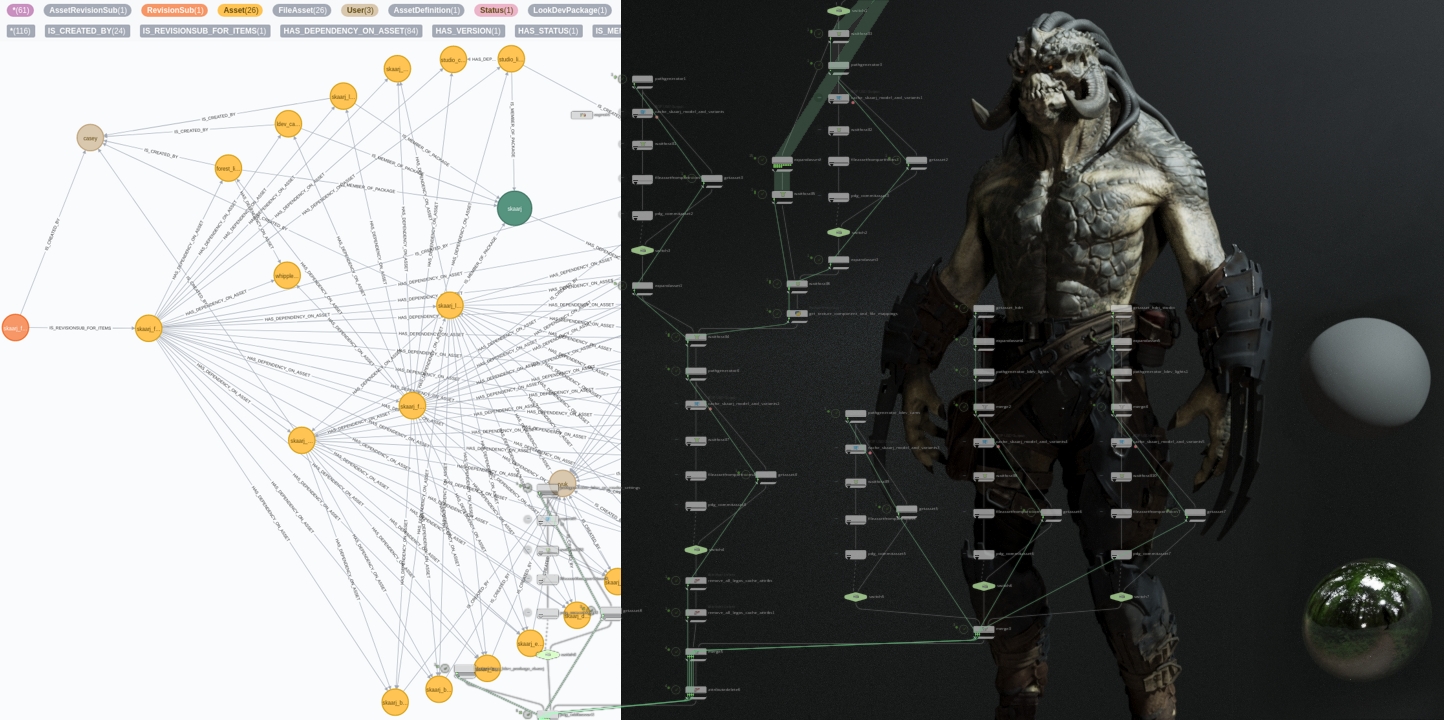

The following is a lookdev test I did using the amazing Skaarj fan-art model created by Ben Erdt.

In a typical VFX pipeline, any CG element which needs to be added to a shot goes through numerous look development cycles, with constant adjustment requests/notes from the client. It is vital that all the information that went into generating an image (or an asset) is tracked in the database, so that the approved version can be regenerated using the correct version of each and every asset that went into the approved content.

Quite a few assets were used to generate the above image and we can deconstruct it into the following ingredients:

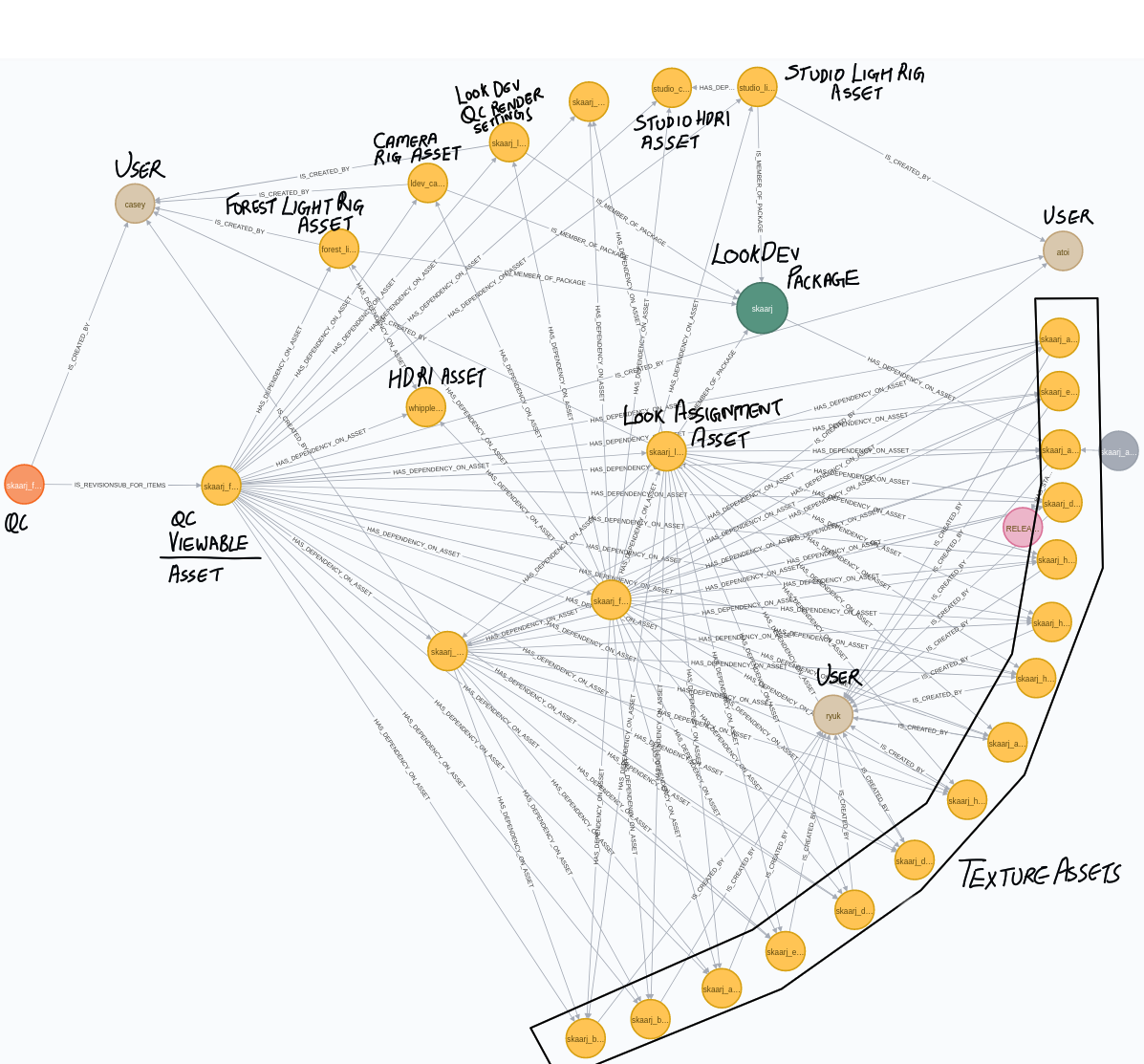

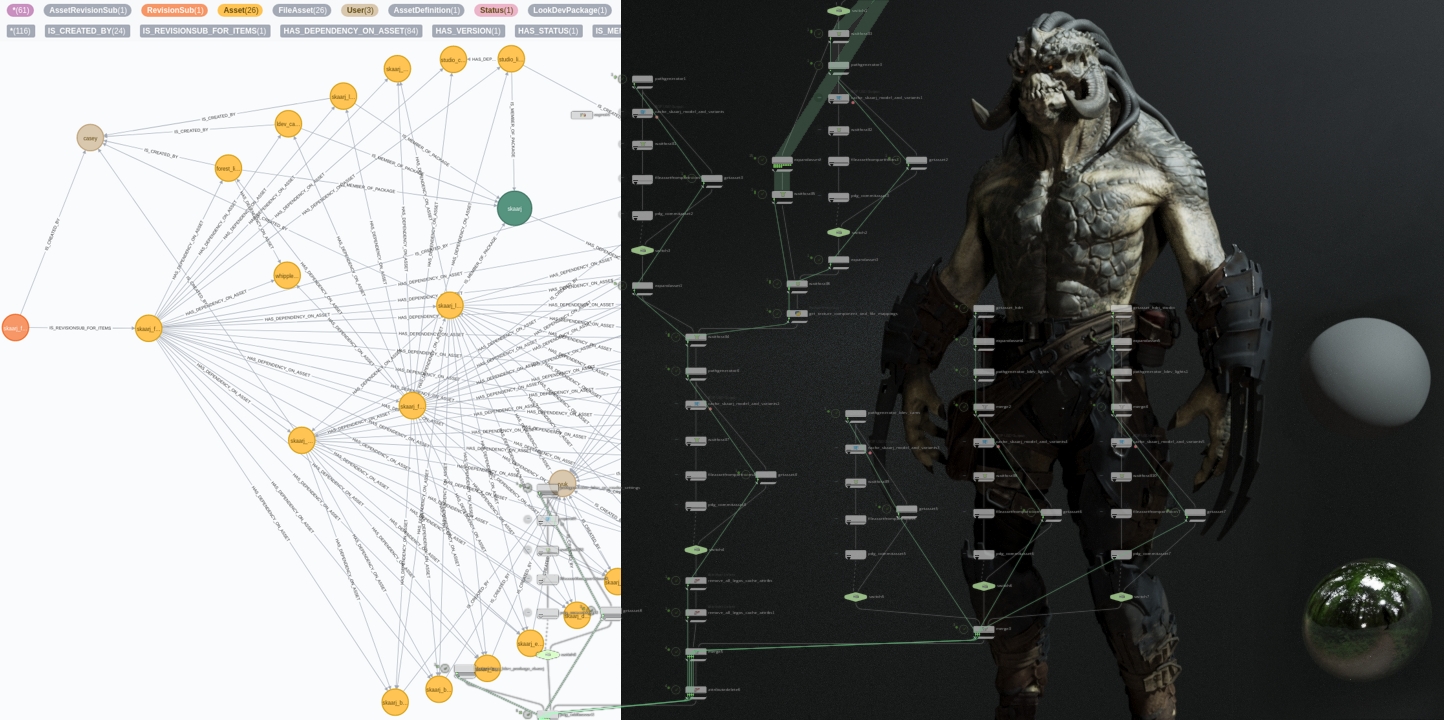

In the above image (Figure 1), I did not use the Skaarj rig to pose the character or add any animation so there are no dependencies on those components. The screenshot below (Figure 2) shows these components and the relationships they have with each other.

All of the above content is generated by artists in separate departments, using different/specialized DCCs. These assets are then checked into the database. In the context of the above example (QC Render in Figure 1), the model and textures were created by Ben Erdt, which meant that the data first had to be ingested into legos to bring it into the AMS.

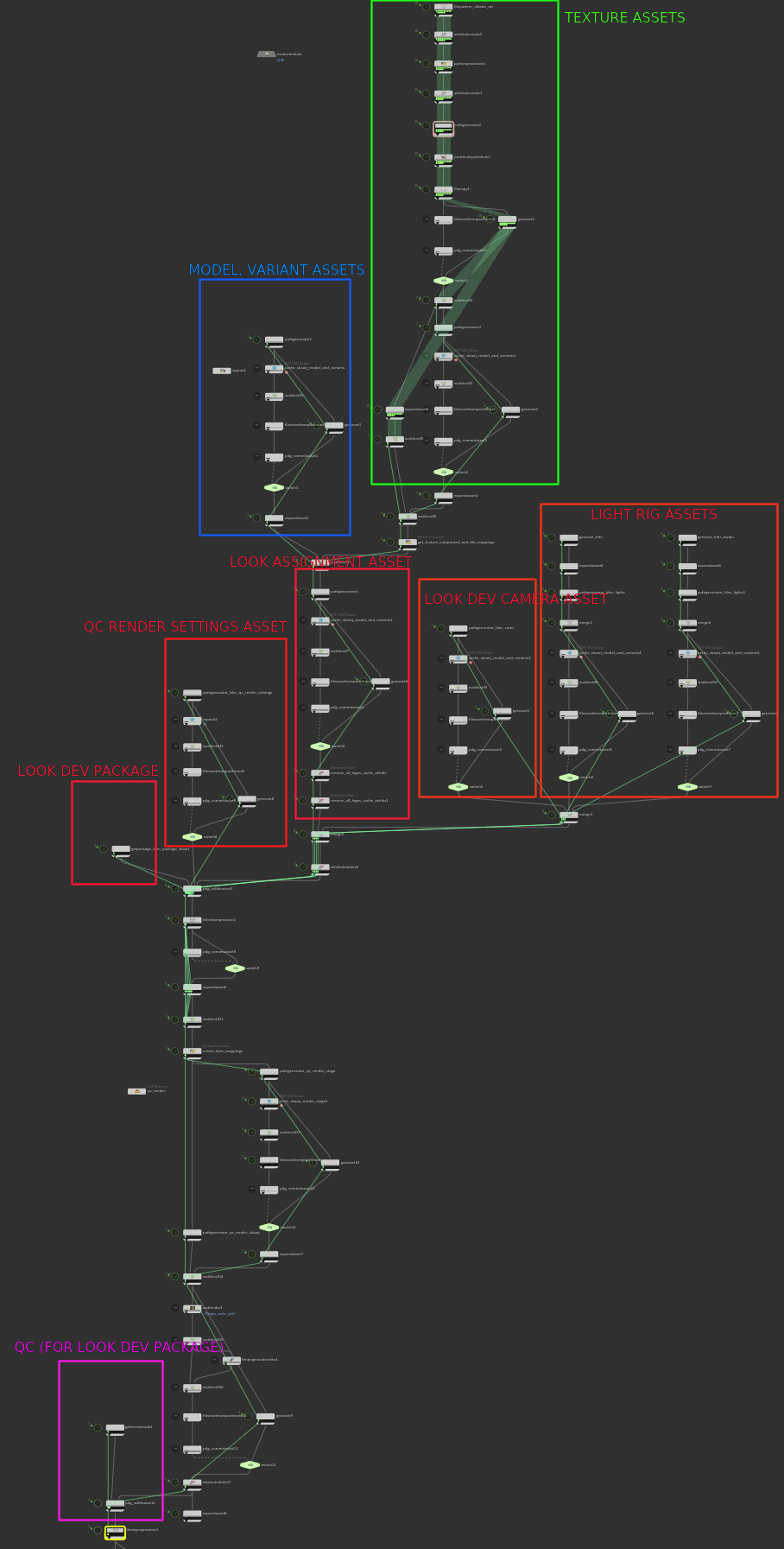

This process is highlighted by the green box in Figure 3. Additionally, the textures are converted into the ACEScg colourspace and saved as .rat files, which are optimised for Houdini and support mipmaps.

Part of the graph highlighted in blue (Figure 3) shows the stage where the models and any model variants are generated and then published into the database (in our case, the model was ingested and published into the database). This is typically done by an artist from the Modelling department.

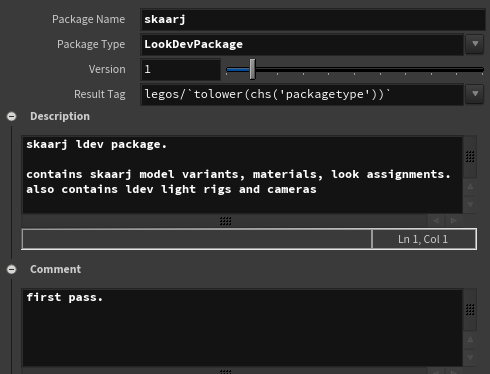

Look development stages are highlighted in Figure 3 in red. A typical lookdev workflow is to calibrate and/or build the materials in a neutral studio lighting environment while constantly checking the look in the intended lighting environments (forest, indoors, sunset etc.). It’s not uncommon for each hero character/asset to have a dedicated lookdev environment. In the above example, we do this by using a LookDevPackage. This LookDevPackage contains all the assets which decide the look of a CG element, including the model. It also contains the lookdev cameras and light rigs which are used while developing the look, and it is meant to be self-sufficient: if you were to import the Skaarj LookDevPackage into a shot and hit render, you would get the same image as our QC render above (Figure 1).

This can also be observed in Figure 2 above. All the assets are shown as yellow nodes, and the LookDevPackage is the node in green. If you look closely, the relationships clearly show that the Skaarj LookDevPackage contains 2 light rigs (a studio lighting env and a forest env), a camera rig (which may contain multiple cameras for closeup or mid range views and different profiles), materials, material bindings, the Skaarj model and a QC render settings asset.

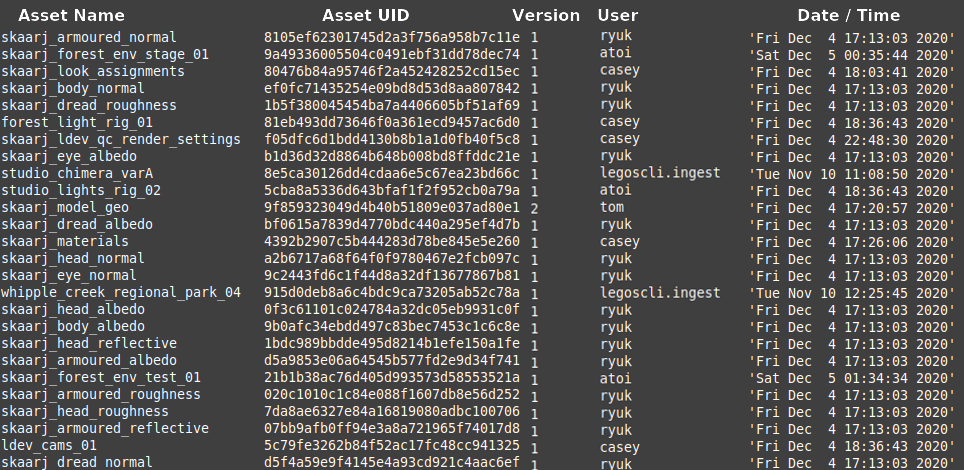

The table below (Figure 4) shows all the assets which were used to render the QC for the Skaarj LookDevPackage.

To generate an image identical to Figure 1, all we need to do now is import that specific version of the Skaarj LookDevPackage and render it.
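The idea that a package version pins the exact version of every asset it contains can be sketched roughly as follows. This is only an illustration under assumed names: the `PUBLISHED` table, the `resolve_package` helper and the asset names are hypothetical, and in legos the lookup would go through the database rather than a dictionary.

```python
from typing import Dict, List, Tuple

# Hypothetical published packages: (package name, version) -> pinned asset versions.
PUBLISHED: Dict[Tuple[str, int], Dict[str, int]] = {
    ("skaarj_lookdev", 1): {
        "skaarj_model": 1,
        "skaarj_materials": 1,
        "studio_light_rig": 1,
    },
    ("skaarj_lookdev", 2): {
        "skaarj_model": 1,
        "skaarj_materials": 2,
        "studio_light_rig": 1,
    },
}


def resolve_package(name: str, version: int) -> List[Tuple[str, int]]:
    """Return the exact (asset, version) pairs recorded for one package version."""
    try:
        pinned = PUBLISHED[(name, version)]
    except KeyError:
        raise LookupError(f"{name} v{version} has not been published") from None
    # Sort by asset name so the result is stable between runs.
    return sorted(pinned.items())


approved = resolve_package("skaarj_lookdev", 1)
```

Because version 1 of the package keeps pointing at version 1 of the materials, publishing a newer materials asset later does not change what the approved render resolves to.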

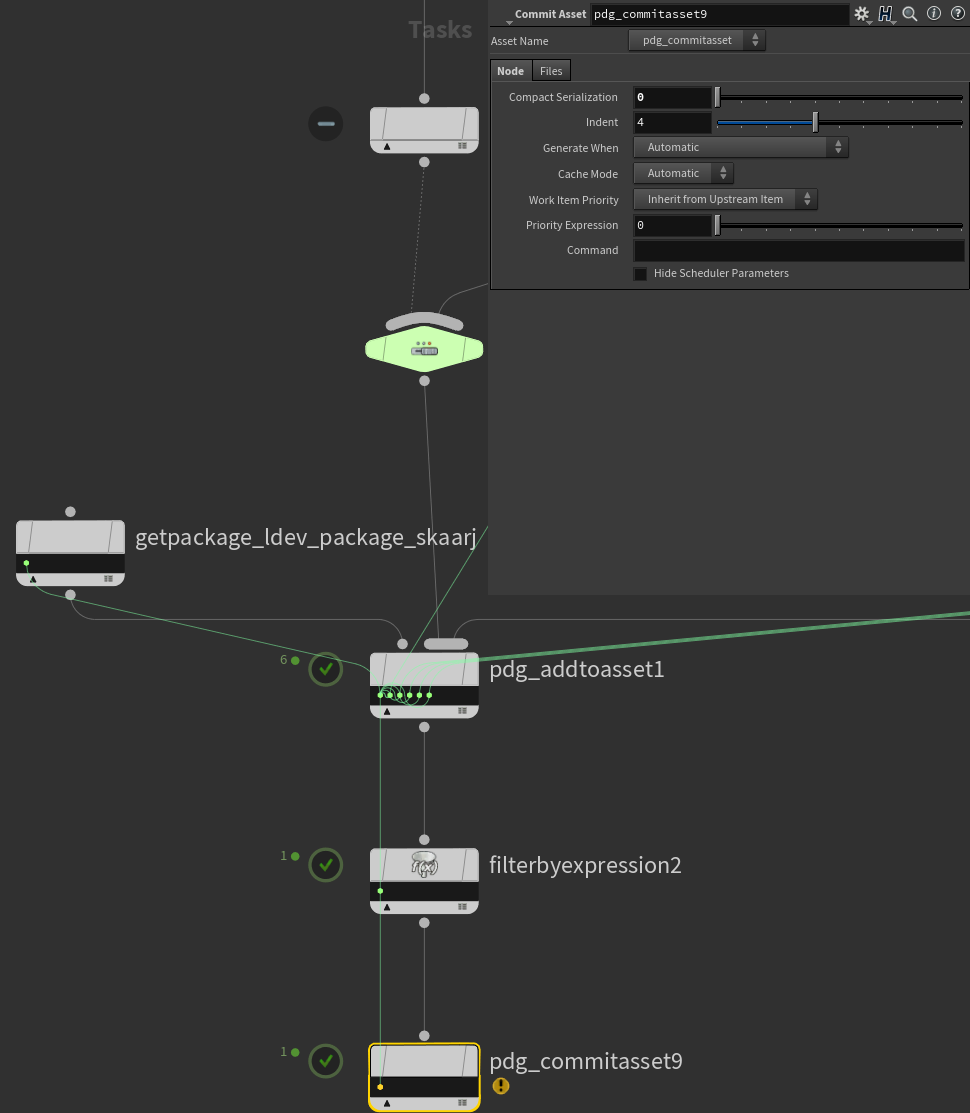

legos is presently quite well integrated with Houdini’s Task Operators (TOPs), providing all the core utilities to push data into and pull data from the database.

The following screenshot shows the UI for the Get Package TOP, which pulls a Package from the database.

The following screenshot shows the section of the graph which was used to generate the Skaarj LookDevPackage used for rendering the character with Houdini’s new rendering engine, Karma.

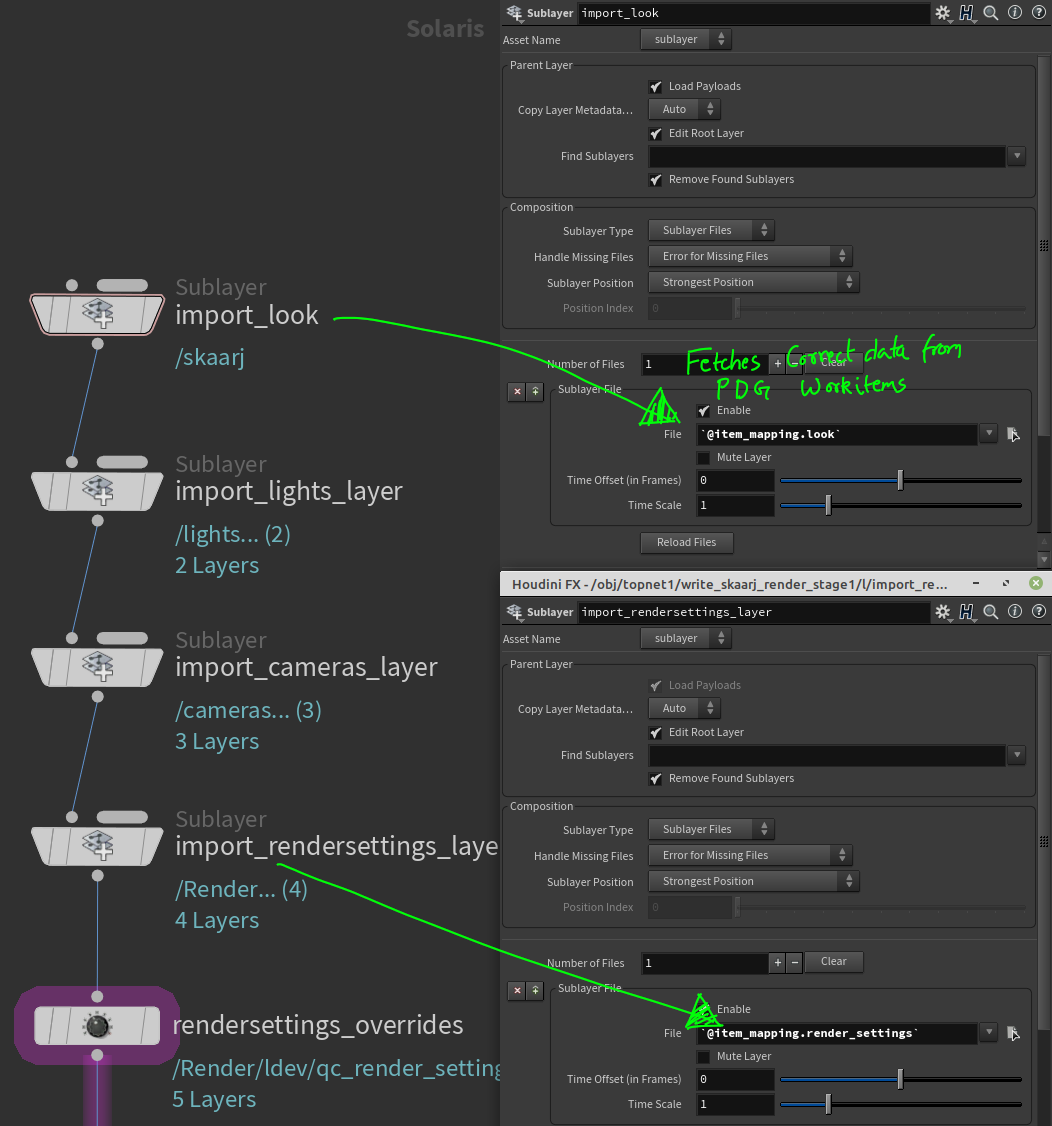

The next step is to integrate legos into Solaris/Light Operators (LOPs). This would allow artists to simply load the Packages into LOPs and start working without any unnecessary marshalling of data (as can be seen in Figure 8, I am wrangling data back from TOPs to get the various assets inside the LOPs context).

The following screenshot shows the LOPs network which was used to render out the final QC render image.

I believe having a tight integration with Houdini’s TOPs and LOPs is quite important for me as Houdini is my primary driver. Additionally, another one of the many todo tasks is to implement a shotbuilding tool for Houdini, which would pull the published data from the database and build a shot ready for the artist to work on (this ranks lower than LOPs integration since I can already pull in the Packages in TOPs which almost acts like a shotbuild).

However, since I am the guy who will be using these tools, I can choose to put it off in favour of writing an Asset Browser first. One thing which has to be implemented to go with an AMS is an asset browser. An AMS cannot be exploited to the fullest if you are unable to browse it in a meaningful way.

I will not pretend to be a database expert. Truth be told, this was my first encounter with database modeling. I first tried out MySQL since it’s fairly straightforward to use. After a few attempts, I realised I was having a really hard time getting a good grasp of how to best organise the tables in the database for optimal performance. I had spent a lot of time and effort designing the object models for legos, and deconstructing them into a bunch of tables just didn’t feel right.

After spending a few days researching databases, I came across Neo4j, a graph database which is also open source (community edition). Diving deeper into Neo4j, I was able to translate the object models I had for legos into database models.

Anyone who has used Neo4j will probably not be able to stop talking about Cypher, its query language. And with good reason! Cypher really made querying and playing around with databases enjoyable for me.

legos leverages Neo4j’s graph database technology which allows it to execute complex queries and also run analytics using graph algorithms effortlessly.
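As a rough illustration of what such a query can look like from Python, the sketch below builds a parameterised Cypher query that lists the assets contained in one package version. The `Package` and `Asset` labels, the `CONTAINS` relationship type and the `name`/`version` properties are assumptions for this example and not the real legos schema.

```python
from typing import Any, Dict, Tuple


def package_contents_query(name: str, version: int) -> Tuple[str, Dict[str, Any]]:
    """Build a parameterised Cypher query listing the assets in one package version."""
    query = (
        # Match the package node, then follow its outgoing CONTAINS edges.
        "MATCH (p:Package {name: $name, version: $version})-[:CONTAINS]->(a:Asset) "
        "RETURN a.name AS name, a.version AS version "
        "ORDER BY name"
    )
    # Values travel separately as parameters instead of being pasted into the text.
    return query, {"name": name, "version": version}


query, params = package_contents_query("skaarj_lookdev", 1)
```

With the official Neo4j Python driver, the pair could then be handed to something like `session.run(query, params)`. Keeping the values as parameters avoids quoting problems and lets the database reuse the query plan.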

One of the biggest challenges I faced was versioning of assets/entities in the database. In a traditional database, it would probably be just another entry in a bunch of tables. It’s not so straightforward in Neo4j, since a new asset version can have a new set of dependencies (in addition to older dependencies). This means we need to version not only nodes but whole subgraphs (nodes plus their connected dependencies). After doing some more research, I was able to implement versioning with slight modifications to the object models and schemas. This, however, is beyond the scope of this article.

The following image shows a bare-bones example of a schema (the complete schema of legos is too complex to go over in this article). Though a schema is not required in Neo4j, I find it helpful as a visual aid and an organisational tool.
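Without going into how legos actually does it, the general problem can be shown with a small in-memory sketch: each new version becomes its own node, inherits the dependency edges of the previous version and may add new ones, while older versions stay untouched. The `VersionedGraph` class and the asset names are hypothetical and only stand in for the idea of versioning a subgraph rather than a single node.

```python
from typing import Dict, Iterable, Set, Tuple

Node = Tuple[str, int]  # (entity name, version)


class VersionedGraph:
    """Toy dependency graph in which every version is a separate node."""

    def __init__(self) -> None:
        self.depends_on: Dict[Node, Set[Node]] = {}

    def latest(self, name: str) -> int:
        """Highest published version of an entity, or 0 if there is none yet."""
        versions = [v for (n, v) in self.depends_on if n == name]
        return max(versions, default=0)

    def publish(self, name: str, add: Iterable[Node] = ()) -> Node:
        """Create the next version, copying the previous edges and adding new ones."""
        previous = self.latest(name)
        inherited = set(self.depends_on.get((name, previous), set()))
        node = (name, previous + 1)
        self.depends_on[node] = inherited | set(add)
        return node


graph = VersionedGraph()
v1 = graph.publish("skaarj_materials", add=[("skaarj_textures", 1)])
v2 = graph.publish("skaarj_materials", add=[("skaarj_displacement", 1)])
```

Here `v2` depends on both the textures and the new displacement asset, while the edges recorded for `v1` are left exactly as they were, so the older version can still be regenerated.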

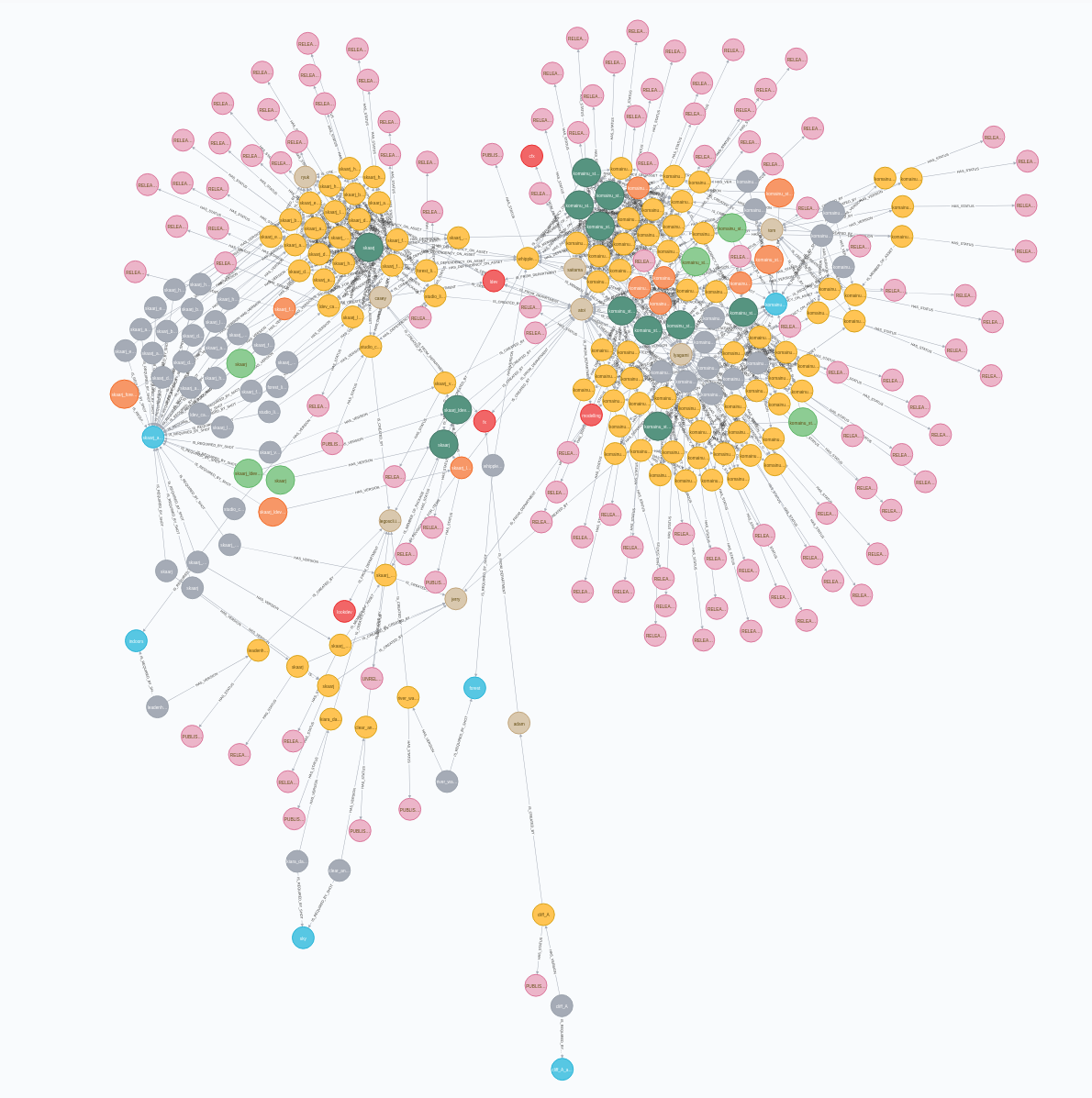

The following screenshot shows a slightly larger part of the database. This is where Neo4j actually starts to shine as a database tool. The visual information that we can get by simply observing the graph lets us quickly identify patterns and visually inspect the database! You can see in the image below (Figure 10) that most of the asset nodes are clustered around different Users, since they are generating a lot of assets. Additionally, most of the assets are also clustered around the Packages which reference them. You can then run queries which are simply not possible out of the box (or would take quite a lot of Python) in other databases such as:

Other than Houdini, I intend to get basic Blender integration for the first beta release of legos. Blender has improved massively to a point where newer studios will start looking at it favourably. Older, already established studios are usually slow to pick up new DCCs just because it might be more work to introduce a new application into the pipeline than the benefits they expect to get from it.

Additionally, Gaffer integration would be pretty cool too. Gaffer is open source and, most importantly, a node based DCC. I have a strong aversion towards non-node based applications (I know Blender too is a non-node based DCC, but it’s free, has EEVEE and good Python support, which makes for a compelling argument).

This year has kept me very busy. There were moments when I wondered if this project would ever finish. Writing an Asset Management System is quite an undertaking, which is one of the reasons smaller studios tend to overlook the benefits it brings to the table. It’s not that they are not aware of it; it just takes time and resources to develop.

There is still a lot of work to be done in expanding the ancillary tooling for legos. I am looking forward to implementing a feature rich Asset Browser and an application for production analytics, as well as continuing the integration of legos into Houdini, Blender and Gaffer. The new year looks very promising indeed!

I hope this inspires you to think about how you can benefit from an AMS and also whets your appetite for graph based databases.